In this new blog article, we dig deeper into the use of the “don’t know” and “no opinion” (“DK/NO”) answer options in closed questions. The scientific literature is divided on whether to include or omit them. After discussing the pros and cons of adding or omitting these answer options in detail, we provide some recommendations on how to deal with them.

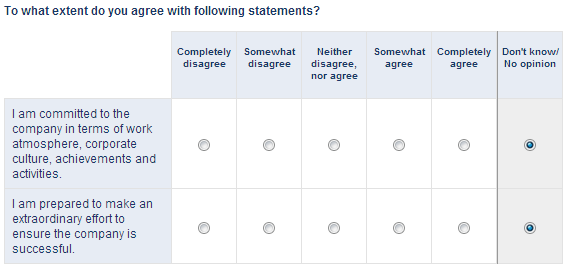

First things first: what do we mean by the “DK/NO” answer options? The idea behind them is very simple. They allow respondents to indicate that they do not know the answer to a question or have no opinion on a particular issue (see the figure above).

1. Pros and cons of adding a “DK/NO”

Now that we have clarified what we mean by “DK/NO”, we can discuss the pros and cons of this answer option.

One of the main advantages of adding a “DK/NO” is that it reduces noise in the data. If you omit the “DK/NO”, respondents who do not know the answer to the question, or who have no strong opinion on it, are nonetheless forced to choose one of the substantive answers (so-called non-attitude reporting). This creates noise in the results, and adding a “DK/NO” reduces it.

However, the idea of adding a “DK/NO” to minimise non-attitude reporting rests on two assumptions (Krosnick et al., 2002). First, there are only two types of respondents: (a) respondents who have an opinion on a given issue and are aware of holding it, and (b) respondents who have no opinion on the issue and are equally aware of this. In other words, respondents are assumed to have a fair amount of self-knowledge. Second, respondents are presumed to act rationally.

As a result, respondents of the first type are expected to report their opinions regardless of whether a “DK/NO” is included or omitted. Respondents of the second type are presumed to choose the “DK/NO” when it is offered. As discussed above, when no “DK/NO” is offered, these respondents will most likely fabricate an opinion in order to appear opinionated. To avoid this fabrication, several survey researchers advocate adding a “DK/NO”.

However, there appears to be a fundamental flaw in these assumptions. They imply that a “DK/NO” attracts only those respondents who truly do not know the answer or have no opinion on the surveyed topic. Yet you can never be 100% certain that an opinionated respondent will not opt for the “DK/NO” as well. As a result, the initial assumptions do not hold, which is why it is argued that you should not automatically presume that a respondent who chooses the “DK/NO” cannot give a meaningful response.

So why would an opinionated respondent opt for the “DK/NO” rather than express his/her true opinion? The scientific literature puts forward several reasons. First, respondents opt for the “DK/NO” if they are not fully certain of what a question means (e.g. Feick, 1989). Second, they may do so to avoid thinking and/or committing themselves (e.g. Oppenheim, 1992). Third, they may do so when the survey exceeds their motivation or ability (Krosnick, 1991).

These last two reasons reflect what Krosnick (1991) calls the ‘Theory of Survey Satisficing’. Answering attitudinal survey questions requires cognitive work: respondents have to think about the question, form a judgment, and translate that judgment into a response (e.g. Schwarz & Bohner, 2001). When the amount of cognitive work exceeds a respondent’s motivation or ability, (s)he will start looking for ways to avoid the workload while still appearing to carry on with the survey. Opting for the “DK/NO” is a simple way of doing so.

That is why adherents of the ‘Theory of Survey Satisficing’ argue in favour of omitting the “DK/NO”: it denies so-called ‘satisficers’ the opportunity to skip the hard work by choosing it.

In recent years, scientific consensus has shifted towards omitting the “DK/NO”. For instance, Krosnick et al. (2002) found that including a “DK/NO” did not reliably improve data quality. Instead, respondents attracted by the “DK/NO” might well have provided substantive answers had no “DK/NO” been offered. The authors even conclude: “Analyses at the aggregate level suggest that many or even most respondents who choose an explicitly offered no-opinion response option may have meaningful attitudes, but we cannot rule out the possibility that some people do so because they truly do not have attitudes.”

2. Recommendations

Now that we have covered the pros and cons of the “DK/NO”, should you offer or omit this answer option?

As so often, it depends.

It depends on whether you can afford to lose potentially useful data. Gathering survey data is expensive: it requires a significant investment of time and money. Following the ‘Theory of Survey Satisficing’, researchers who offer a “DK/NO” may be wasting responses from people who would otherwise have given a substantive answer.

It depends on the nature of the questions and the profile of the respondents. Intuitively, it makes more sense to add a “DK/NO” to a factual question than to an attitudinal one. The same applies if you are surveying an audience that may well not know the answer. For instance, if you ask your customers to rate your helpdesk, it makes sense to add a “DK/NO” unless you are 100% sure that every customer has been in contact with the helpdesk. It makes far less sense, however, when you survey high school students on their attitudes towards bullying their fellow students.

It depends on whether your sample is large enough, as offering a “DK/NO” will most likely reduce your effective sample size: some of your respondents will opt for the “DK/NO” and will then usually be excluded from the analysis. This reduction will be even stronger for certain socio-demographic groups. It has been shown, for instance, that respondents with a lower level of education opt for the “DK/NO” far more often than those with a higher level of education.
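To make the sample-size point concrete, here is a minimal Python sketch with entirely made-up counts (the group names, group sizes and “DK/NO” rates are hypothetical, not data from the studies cited). It drops the “DK/NO” answers on a single item and shows how both the overall effective n and the balance between groups shift when one group chooses the “DK/NO” more often.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    respondents: int  # completed questionnaires in this (hypothetical) group
    dk_no: int        # how many of them answered "DK/NO" on the item


def usable_counts(groups):
    """Respondents left per group once "DK/NO" answers are excluded."""
    return {g.name: g.respondents - g.dk_no for g in groups}


def shares(counts):
    """Each group's share of the analysed sample."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


# Made-up numbers, chosen only to illustrate the mechanism.
groups = [
    Group("lower education", respondents=400, dk_no=80),
    Group("higher education", respondents=600, dk_no=30),
]

before = {g.name: g.respondents for g in groups}
after = usable_counts(groups)

print("effective n:", sum(before.values()), "->", sum(after.values()))
for name in before:
    print(f"{name}: {shares(before)[name]:.0%} -> {shares(after)[name]:.0%} of the sample")
```

With these invented figures the effective n drops from 1000 to 890, and the lower-education group shrinks from 40% to about 36% of the analysed sample, so its views carry less weight in the results.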

Finally, if you do decide to offer a “DK/NO”, you can try to get substantive data from respondents who chose it by asking them a follow-up question, for instance whether they lean towards one of the substantive response options (Bradburn & Sudman, 1988).
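One way to script such a follow-up in an online questionnaire is sketched below in Python. It is an illustration under assumptions, not the procedure described by Bradburn & Sudman: the follow-up wording, the `ask_with_lean` function and the two accepted lean values are hypothetical, and `ask_follow_up` stands in for whatever your survey tool uses to show a question and collect an answer.

```python
from typing import Callable, Optional

DK_NO = "DK/NO"
# Hypothetical follow-up wording; adapt it to your own scale.
FOLLOW_UP = "If you had to choose, do you lean more towards agreeing or disagreeing?"


def ask_with_lean(first_answer: str,
                  ask_follow_up: Callable[[str], Optional[str]]) -> dict:
    """Record the initial answer and, only for "DK/NO", a separate lean."""
    record = {"answer": first_answer, "lean": None}
    if first_answer == DK_NO:
        lean = ask_follow_up(FOLLOW_UP)
        # Accept only a substantive lean; anything else leaves "lean" empty.
        if lean in ("agree", "disagree"):
            record["lean"] = lean
    return record


# Usage with stand-in answer functions instead of a real survey tool:
print(ask_with_lean("DK/NO", lambda question: "agree"))
print(ask_with_lean("Agree", lambda question: "disagree"))
```

Storing the lean in its own field rather than overwriting the original “DK/NO” keeps both pieces of information, so you can analyse the results with or without the leaners.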

So, how do you deal with the ‘don’t know/no opinion’ answer options in your surveys?

References

Bradburn, N. M. and Sudman, S. (1988), Polls and Surveys: Understanding What They Tell Us. San Francisco: Jossey-Bass.

Feick, L. F. (1989), “Latent Class Analysis of Survey Questions That Include Don’t Know Responses”, Public Opinion Quarterly, 53: 525–47.

Krosnick, J. A. (1991), “Response Strategies for Coping with the Cognitive Demands of Attitude Measures in Surveys”, Applied Cognitive Psychology, 5: 213–36.

Krosnick, J. A., Holbrook, A. L., et al. (2002), “The Impact of ‘No Opinion’ Response Options on Data Quality: Non-Attitude Reduction or an Invitation to Satisfice?”, Public Opinion Quarterly, 66: 371–403.

Oppenheim, A. N. (1992), Questionnaire Design, Interviewing, and Attitude Measurement. London: Pinter.

Schwarz, N. and Bohner, G. (2001), “The Construction of Attitudes”, in A. Tesser and N. Schwarz (eds.), Blackwell Handbook of Social Psychology: Intraindividual Processes, vol. 1, pp. 436–57. Oxford: Blackwell.

17 comments

Capthall - December, 2018

None of the above is the only answer to many survey questions…

Rich Williams - December, 2016

Tangentially related … we have a paper/printed satisfaction survey with the first 20 questions having 5 possible responses: Very Satisfied, Satisfied, Dissatisfied, Very Dissatisfied, and NA (not applicable). I see in the example on this page that the DK/NO column is set off by a vertical line and darker shading. Our survey is not like this – the respondent reads the column heading for each of the 5 choices to discern which answer they should mark.

So, this is probably a dumb question: should this column be set off?

Gert Van Dessel - December, 2016

Hi Rich,

this is not absolutely necessary, but it does make it easier for the respondent to fill in.

And the easier the questionnaire, the higher the response rates…

Rich Williams - December, 2016

Thank you Gert!

Abdiel - September, 2016

Thanks for your article! We created a diagnostic test of English language knowledge for our English course. We decided to add a DK/NO option to keep students from answering questions they really don’t know the answer to. After reading your article, I think our questions can be seen as ‘factual questions’, since they are there only to test students’ knowledge. Are there any additional opinions on diagnostic tests?

Gert Van Dessel - September, 2016

Abdiel,

I don’t have specific insights on diagnostic tests, maybe one of the other readers of this article?

Nicolas Fernandez Perez - July, 2016

Hi, very interesting subject. If it is not too late, I would like to ask your opinion on a listening test (which is different from a survey), for example an ABX test, where usually the only two options available to the subject taking the test are X=A or X=B. X is randomly chosen between A and B by a machine, and samples A and B differ only very slightly from each other.

What would happen and how would it affect the results of the test, if we include a third option called “I don’t know”?

The key thing here is that we are trying to determine whether or not a subject can tell there is a difference between samples A and B, based on his/her perception (listening).

Thank you!

Gert Van Dessel - July, 2016

Hi Nicolas,

Thanks for your comment. I am not familiar with this type of testing, but invite other readers of this article to post their experiences with this.

Nate Richey - May, 2015

I used to work in a call center in Los Angeles, taking people’s opinions. Our rule of thumb was not to read that option out, to make sure they provided a response, and then to probe if it didn’t fit verbatim. The “don’t know/no opinion” option was only needed for respondents who gave their opinion without understanding that they needed to choose an option, which was rare. In some cases the survey would let us do that; in other cases, it wouldn’t. But that was a last resort.

Matt Champagne - February, 2014

This is a very nice post and I’m enjoying the conversation. Providing a “Not Applicable” option allows the respondent to prevent you from learning her opinion. The “Not Applicable” option may be fine for some categories, such as questions about a company’s customer service or a facility’s public restrooms, which the respondent may not have used. In many cases, however, the respondent has an opinion and we don’t want to lose that information with a “Not Applicable” option. In November 2013, I wrote a blog post on this very topic. Have a look when you or other readers have a chance: http://www.embeddedassessment.com/Lesson5_Surveys_OptOut

Didier Dierckx - February, 2014

We are also getting some interesting comments in the LinkedIn Group “Market Research Association”

“In general, when there is hard data required we would prefer a best guess response rather than a DK/NO, but will accept a true DK. In the case of opinions or attributes. A DK/NO response should not be provided.”

and …

“The other question is: what does a DK response really mean? In many situations, the answer has multiple interpretation. If the respondent is asked to rate a brand as “being charismatic”, does a DK response mean “I don’t know enough about the brand to answer this” or “I don’t know how the concept of charismatic applies” or “I don’t know what charismatic means”?”

Didier Dierckx - February, 2014

Some interesting comments on LinkedIn in the group ‘Market Research Field Directors/Field Management Pros/Data Collection Vets/On-line & Off-line’:

“In our practice, we follow the final recommendation in the article: include DK/NO when there is a reasonable likelihood that not all respondents may be able to provide an answer but exclude the DK/NO option when respondents really ought to have an opinion on a particular topic.”

and

“From an emotional work point of view, ease of working and interview flow, and for data accuracy and respondent sensitivity, I am much more comfortable with an unforced response – the inclusion of DK and REF give the respondent an “Out” option which they may require for any number of reasons.”

Michelle Frietchen - February, 2014

In the example above there already is a neutral option – “Neither Disagree, nor Agree” – if a respondent does not know how they feel, have not experienced that aspect of X or are just “not wanting to think about it” they have an out by selecting the middle of the road response.

I typically include a “Not Applicable” column to the far right if I feel that respondents may not be able to rate a particular question. When I feel that they should be able to have an opinion based on their criteria or experience, I still have NA, but many times do not include a neutral category in the middle of the scale. I do include a Don’t Know if I feel it would apply to the audience.

I agree, it is very frustrating when it truly does not apply and one is being asked to rate something.

Didier Dierckx - February, 2014

Hi Michelle,

Interesting comments

I would argue that you should try, as much as possible, to separate ‘neutral’ respondents from respondents who have not experienced an aspect or do not want to think about it. Being neither dissatisfied nor satisfied (i.e. neutral) with a certain service is different from not wanting to think about it or not having experienced the service.

Throwing those three types of respondents onto the same pile would, in my opinion, muddle the data. How do you feel about this?

Austin Lemar - January, 2014

Alexander, couldn’t you just mark these questions as ‘not required’?

Alexander Dobronte - January, 2014

One reason is that many respondents are not very technically savvy and miss the signs indicating which questions are required. They may think every question is required, or in good faith try to answer every question. If they come to a question they can’t answer, they feel they have two choices:

1. Give a random or neutral response

2. Leave the survey

The first option hurts the quality of your results and makes the respondent less invested in the rest of the survey since they gave what they know to be an invalid response and question the value of their effort.

The second option also hurts since often only completed surveys are included in the analysis.

Alexander Dobronte - January, 2014

Providing an “N/A” option gives respondents a way to avoid thinking about the question, but if you leave it out, be absolutely sure that respondents can give an answer, or else they will leave the survey. A common mistake we see is surveys that ask respondents to rate a list of services from a company, which the respondent may or may not have used. If there is no “Don’t know” option and the question is required, the respondent will either give a random response to continue or, more likely, stop there.